This article describes how to set up Prometheus on CentOS 7 to monitor Linux hosts.

Introduction

Prometheus can be split across multiple nodes to collect metrics.

Environment: CentOS 7

Installing Prometheus

wget -c https://github.com/prometheus/prometheus/releases/download/v2.23.0/prometheus-2.23.0.linux-amd64.tar.gz

tar zxvf prometheus-2.23.0.linux-amd64.tar.gz -C /opt/

cd /opt/

ln -s prometheus-2.23.0.linux-amd64 prometheus

cat > /etc/systemd/system/prometheus.service <<EOF
[Unit]
Description=prometheus
After=network.target

[Service]
Type=simple
WorkingDirectory=/opt/prometheus
ExecStart=/opt/prometheus/prometheus --config.file="/opt/prometheus/prometheus.yml"
LimitNOFILE=65536
PrivateTmp=true
RestartSec=2
StartLimitInterval=0
Restart=always

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload

systemctl enable prometheus

systemctl start prometheus
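
After starting the service it can help to confirm that Prometheus is actually answering requests. The following is a minimal sketch, not part of the original setup: `wait_ready` is a hypothetical helper name, and it assumes `curl` is installed and that Prometheus listens on its default port 9090, where it exposes the `/-/ready` endpoint.

```shell
# wait_ready URL [TRIES]: poll URL once per second until it answers with an
# HTTP success status, giving up after TRIES attempts (default 30).
# Note: url, tries and i are plain (global) shell variables.
wait_ready() {
  url=$1
  tries=${2:-30}
  i=0
  while [ "$i" -lt "$tries" ]; do
    # -f makes curl exit non-zero on HTTP errors; -s hides progress output
    if curl -fs -o /dev/null "$url"; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}
```

For example, `wait_ready http://localhost:9090/-/ready && echo "Prometheus is ready"` prints the message once the server reports ready.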

Configuring Prometheus

This configuration makes Prometheus watch the JSON files under /opt/prometheus/servers/ for scrape targets.

# Quote the heredoc delimiter so the backticks in the comments below are not run as a command.
cat > /opt/prometheus/prometheus.yml <<'EOF'
# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
  - static_configs:
    - targets:
      # - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  # - "first_rules.yml"
  # - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's Prometheus itself.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: 'prometheus'

    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.

    static_configs:
    - targets: ['localhost:9090']

  - job_name: 'servers'
    file_sd_configs:
    - refresh_interval: 61s
      files:
      - /opt/prometheus/servers/*.json
EOF

systemctl restart prometheus
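
A broken prometheus.yml stops the service from starting, so it may be worth checking the file before each restart. The sketch below assumes the `promtool` binary that ships in the same release tarball sits at /opt/prometheus/promtool. `reload_if_valid` and the `PROMTOOL` variable are hypothetical names introduced for illustration.

```shell
# Path to promtool; assumed to be the copy unpacked with Prometheus.
PROMTOOL=${PROMTOOL:-/opt/prometheus/promtool}

# reload_if_valid CONFIG: restart Prometheus only when promtool accepts CONFIG.
reload_if_valid() {
  if "$PROMTOOL" check config "$1"; then
    systemctl restart prometheus
  else
    echo "config check failed, not restarting" >&2
    return 1
  fi
}
```

It could be called as `reload_if_valid /opt/prometheus/prometheus.yml` in place of the plain restart.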

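JSON format

Each JSON file must contain an array of objects. If you do not need custom labels, you can simply list all hosts in a single targets array. You can use multiple files.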

[
  {
    "targets": [
      "192.168.1.164:9100"
    ],
    "labels": {
      "instance": "192.168.1.164",
      "job": "node_exporter"
    }
  },
  {
    "targets": [
      "192.168.1.167:9100"
    ],
    "labels": {
      "instance": "192.168.1.167",
      "job": "node_exporter"
    }
  }
]
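
For the simpler case without custom labels, the target file can be generated from a list of hosts. This is only a sketch: `targets_json` is a hypothetical helper, and the output file name used in the note below is an assumption.

```shell
# targets_json PORT HOST...: print a file_sd JSON document with one entry
# that lists every HOST:PORT in a single "targets" array (no custom labels).
targets_json() {
  port=$1
  shift
  printf '[\n  {\n    "targets": ['
  sep=''
  for host in "$@"; do
    printf '%s"%s:%s"' "$sep" "$host" "$port"
    sep=', '
  done
  printf ']\n  }\n]\n'
}
```

For example, after `mkdir -p /opt/prometheus/servers`, running `targets_json 9100 192.168.1.164 192.168.1.167 > /opt/prometheus/servers/nodes.json` writes both hosts into one file. Without a labels block, the job label stays `servers` and the instance label defaults to the host:port address.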

Installing node_exporter

Install it under /opt/node_exporter and keep the default port (9100).

wget -c https://github.com/prometheus/node_exporter/releases/download/v1.0.1/node_exporter-1.0.1.linux-amd64.tar.gz

tar zxvf node_exporter-1.0.1.linux-amd64.tar.gz -C /opt/

cd /opt/

ln -s node_exporter-1.0.1.linux-amd64 node_exporter

cat > /etc/systemd/system/node_exporter.service <<EOF
[Unit]
Description=node_exporter
After=network.target

[Service]
Type=simple
WorkingDirectory=/opt/node_exporter
ExecStart=/opt/node_exporter/node_exporter
LimitNOFILE=65536
PrivateTmp=true
RestartSec=2
StartLimitInterval=0
Restart=always

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload

systemctl enable node_exporter

systemctl start node_exporter
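
To check that node_exporter is serving data, one option is to fetch its metrics page and pull out a single value. The helper below is a minimal sketch with a hypothetical name, `metric_value`. It only handles samples without labels, such as `node_load1`.

```shell
# metric_value NAME: read Prometheus text-format metrics on stdin and print
# the value of the first unlabelled sample whose metric name is exactly NAME.
# Exits non-zero when no such sample is found.
metric_value() {
  awk -v name="$1" '
    $1 == name { print $2; found = 1; exit }
    END { exit !found }
  '
}
```

Assuming `curl` is available, `curl -s http://localhost:9100/metrics | metric_value node_load1` prints the current 1-minute load average reported by the exporter.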

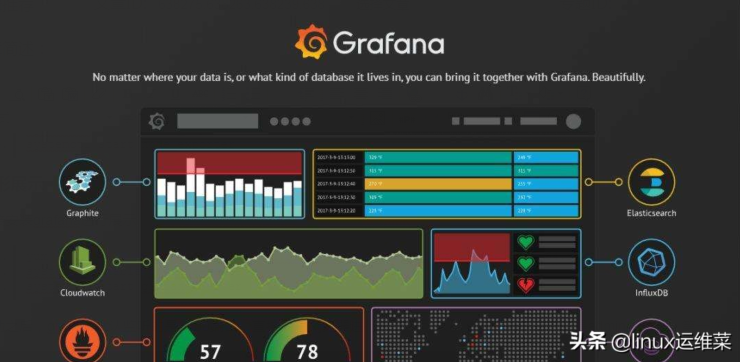

Visualization

Install Grafana to display the metrics.

yum -y install https://dl.grafana.com/oss/release/grafana-7.3.6-1.x86_64.rpm

systemctl enable grafana-server

systemctl start grafana-server

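After startup, Grafana listens on port 3000 by default, so you can open it directly in a browser. The default username and password are admin/admin, and you are prompted to change the password after the first login.

Configure the data source: in the left-hand menu, go to Configuration -> Data Sources -> Add data source -> select Prometheus -> enter the Prometheus address in the URL field -> click Save & Test.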
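
As an alternative to clicking through the UI, Grafana can also read data sources from provisioning files at startup. The sketch below is not from the original article: `write_datasource` is a hypothetical helper, and the file name in the note is an assumption.

```shell
# write_datasource FILE URL: write a Grafana provisioning file that registers
# a Prometheus data source pointing at URL.
write_datasource() {
  cat > "$1" <<EOF
apiVersion: 1
datasources:
  - name: Prometheus
    type: prometheus
    access: proxy
    url: $2
    isDefault: true
EOF
}
```

With the RPM install, a call such as `write_datasource /etc/grafana/provisioning/datasources/prometheus.yaml http://localhost:9090` followed by `systemctl restart grafana-server` should make the data source appear without any manual steps.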

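Ready-made dashboard templates are available at grafana.com/grafana/dashboards, and you can import them directly.

Import a dashboard: in the left-hand menu, go to Dashboards -> Import -> enter the ID -> Load -> select the data source.

The two I use most for viewing node_exporter metrics are 1860 and 8919.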

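Summary

All of these services are managed by systemd, which makes them convenient to operate.

No alerting is configured here. You can define alert rules to suit your needs and use Alertmanager to send the alerts.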
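
As a starting point for such rules, the sketch below writes a single rule that fires when a node_exporter target has been unreachable for five minutes. The helper name `write_down_rule`, the rule name, the severity label and the five-minute window are illustrative assumptions, not settings from the original article.

```shell
# write_down_rule FILE: write a Prometheus rule file with one alert that fires
# when a target in the node_exporter job has been down for 5 minutes.
# The heredoc delimiter is quoted so that {{ $labels.instance }} stays literal.
write_down_rule() {
  cat > "$1" <<'EOF'
groups:
  - name: node
    rules:
      - alert: InstanceDown
        expr: up{job="node_exporter"} == 0
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Instance {{ $labels.instance }} is down"
EOF
}
```

To use it, the generated file would need to be listed under `rule_files` in prometheus.yml, and an Alertmanager target would have to be configured in the `alerting` section. Running `/opt/prometheus/promtool check rules FILE` can validate the file first.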

Source: https://os.51cto.com/article/639676.html