https://github.com/ultralytics/yolov5

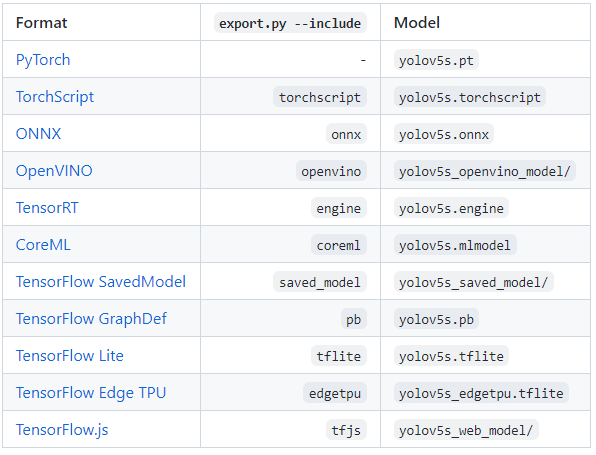

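YOLOv5 release 6.1 adds support for TensorRT, Edge TPU, and OpenVINO, and provides models retrained at batch size 128 with a new default one-cycle linear LR scheduler. YOLOv5 now officially supports 11 different weight formats. They can be exported directly, used for inference (both detect.py and PyTorch Hub), and validated after export to profile mAP and speed.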

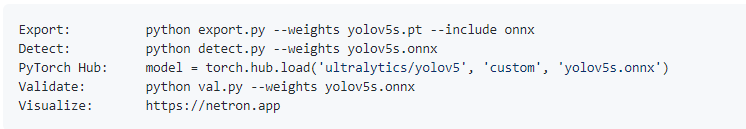

One example is exporting a model to an ONNX file.
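
As a rough illustration of what such an export call looks like, the sketch below assembles the command line for `export.py` in Python. The `--weights` and `--include` flags are the ones the repository's export script accepts; the helper name `build_export_command` and the `yolov5s.pt` checkpoint path are assumptions made for this example only.

```python
import shlex
import sys


def build_export_command(weights, formats, script="export.py"):
    """Assemble an export.py invocation as an argument list (hypothetical helper)."""
    if not formats:
        raise ValueError("at least one export format is required")
    return [sys.executable, script, "--weights", weights, "--include", *formats]


cmd = build_export_command("yolov5s.pt", ["onnx"])
# Print the part after the interpreter as a shell-ready string.
print(shlex.join(cmd[1:]))
```

Passing `cmd` to `subprocess.run` from inside a YOLOv5 checkout would run the export; the sketch only builds and prints the arguments.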

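1 Major Updates

- TensorRT support: TensorFlow, Keras, TFLite, and TF.js model export is now fully integrated via `python export.py --include saved_model pb tflite tfjs`.
- TensorFlow Edge TPU: the new, smaller YOLOv5n model (1.9M params) sits below YOLOv5s (7.5M params) and exports to a 2.1 MB INT8 file, ideal for ultralight edge solutions.
- OpenVINO support: YOLOv5 ONNX models are now compatible with both OpenCV DNN and ONNX Runtime.
- Export Benchmarks: run `python utils/benchmarks.py` to benchmark all YOLOv5 export formats (mAP and speed). It currently runs on CPU; future updates will add GPU support.
- Architecture: no changes.
- Hyperparameters: minor change. The YAML `lrf` value is reduced from 0.2 to 0.1.
- Training: the default learning rate (LR) scheduler is updated, replacing one-cycle cosine with one-cycle linear for improved results.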
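
To make the scheduler change concrete, here is a minimal sketch that compares a one-cycle cosine decay with a one-cycle linear decay. Both are written as multiplicative factors on the initial learning rate, falling from 1.0 to `lrf` over training. The function names, the 300-epoch run, and the choice of `lrf = 0.1` are assumptions for illustration, not code quoted from the release.

```python
import math


def linear_lr_factor(epoch, epochs, lrf):
    """One-cycle linear decay: 1.0 at epoch 0 down to lrf at the last epoch."""
    return (1 - epoch / epochs) * (1.0 - lrf) + lrf


def cosine_lr_factor(epoch, epochs, lrf):
    """One-cycle cosine decay between the same two end points."""
    return ((1 - math.cos(epoch * math.pi / epochs)) / 2) * (lrf - 1.0) + 1.0


epochs, lrf = 300, 0.1  # assumed values for this sketch
for epoch in (0, 75, 150, 225, 300):
    lin = linear_lr_factor(epoch, epochs, lrf)
    cos = cosine_lr_factor(epoch, epochs, lrf)
    print(f"epoch {epoch:3d}  linear {lin:.4f}  cosine {cos:.4f}")
```

Both curves share the same start, midpoint, and end values. The cosine curve stays higher early in training and lower late in training, while the linear curve decays at a constant rate.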

Model Export in the New Version

1. ONNX

    def export_onnx(model, im, file, opset, train, dynamic, simplify, prefix=colorstr('ONNX:')):
        # YOLOv5 ONNX export
        try:
            check_requirements(('onnx',))
            import onnx

            LOGGER.info(f'\n{prefix} starting export with onnx {onnx.__version__}...')
            f = file.with_suffix('.onnx')

            torch.onnx.export(model, im, f, verbose=False, opset_version=opset,
                              training=torch.onnx.TrainingMode.TRAINING if train else torch.onnx.TrainingMode.EVAL,
                              do_constant_folding=not train,
                              input_names=['images'],
                              output_names=['output'],
                              dynamic_axes={'images': {0: 'batch', 2: 'height', 3: 'width'},  # shape(1,3,640,640)
                                            'output': {0: 'batch', 1: 'anchors'}  # shape(1,25200,85)
                                            } if dynamic else None)

            # Checks
            model_onnx = onnx.load(f)  # load onnx model
            onnx.checker.check_model(model_onnx)  # check onnx model
            # LOGGER.info(onnx.helper.printable_graph(model_onnx.graph))  # print

            # Simplify
            if simplify:
                try:
                    check_requirements(('onnx-simplifier',))
                    import onnxsim

                    LOGGER.info(f'{prefix} simplifying with onnx-simplifier {onnxsim.__version__}...')
                    model_onnx, check = onnxsim.simplify(
                        model_onnx,
                        dynamic_input_shape=dynamic,
                        input_shapes={'images': list(im.shape)} if dynamic else None)
                    assert check, 'assert check failed'
                    onnx.save(model_onnx, f)
                except Exception as e:
                    LOGGER.info(f'{prefix} simplifier failure: {e}')
            LOGGER.info(f'{prefix} export success, saved as {f} ({file_size(f):.1f} MB)')
            return f
        except Exception as e:
            LOGGER.info(f'{prefix} export failure: {e}')
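
The shape comments in the listing, `(1,3,640,640)` for the input and `(1,25200,85)` for the output, can be checked with a little arithmetic. The sketch below assumes the usual YOLOv5 COCO configuration, which the listing itself does not state: three detection strides (8, 16, 32), three anchors per grid cell, and 80 classes, each prediction carrying 4 box values plus 1 objectness score. The function name is made up for this example.

```python
def yolov5_output_shape(img_size=640, num_classes=80, strides=(8, 16, 32),
                        anchors_per_cell=3, batch=1):
    """Predicted output shape for a square input (assumed default configuration)."""
    # One grid per stride: 80x80, 40x40 and 20x20 cells for a 640 px input
    cells = sum((img_size // s) ** 2 for s in strides)
    # Each prediction holds x, y, w, h, objectness, then one score per class
    return (batch, anchors_per_cell * cells, num_classes + 5)


print(yolov5_output_shape())  # (1, 25200, 85)
```

This also shows why `dynamic_axes` names axis 1 of the output `anchors`: its length depends on the input height and width, so it changes whenever the image size does.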

2. OpenVINO

    def export_openvino(model, im, file, prefix=colorstr('OpenVINO:')):
        # YOLOv5 OpenVINO export
        try:
            check_requirements(('openvino-dev',))  # requires openvino-dev: https://pypi.org/project/openvino-dev/
            import openvino.inference_engine as ie

            LOGGER.info(f'\n{prefix} starting export with openvino {ie.__version__}...')
            f = str(file).replace('.pt', '_openvino_model' + os.sep)

            cmd = f"mo --input_model {file.with_suffix('.onnx')} --output_dir {f}"
            subprocess.check_output(cmd, shell=True)

            LOGGER.info(f'{prefix} export success, saved as {f} ({file_size(f):.1f} MB)')
            return f
        except Exception as e:
            LOGGER.info(f'\n{prefix} export failure: {e}')
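
The OpenVINO export is mostly path handling around a call to the `mo` command-line tool. The following sketch reproduces only that string construction, so it is easy to see which files are read and written. The helper name and the `yolov5s.pt` path are assumptions for this example.

```python
import os
from pathlib import Path


def openvino_export_command(weights):
    """Build the `mo` command and output directory used by the listing (illustrative helper)."""
    file = Path(weights)
    out_dir = str(file).replace('.pt', '_openvino_model' + os.sep)  # output directory
    onnx_file = file.with_suffix('.onnx')  # input: a previously exported ONNX file
    return f"mo --input_model {onnx_file} --output_dir {out_dir}", out_dir


cmd, out_dir = openvino_export_command("yolov5s.pt")
print(cmd)
```

Because the command reads `yolov5s.onnx`, the ONNX export has to have produced that file before this step runs.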

3. CoreML

    def export_coreml(model, im, file, prefix=colorstr('CoreML:')):
        # YOLOv5 CoreML export
        try:
            check_requirements(('coremltools',))
            import coremltools as ct

            LOGGER.info(f'\n{prefix} starting export with coremltools {ct.__version__}...')
            f = file.with_suffix('.mlmodel')

            ts = torch.jit.trace(model, im, strict=False)  # TorchScript model
            ct_model = ct.convert(ts, inputs=[ct.ImageType('image', shape=im.shape, scale=1 / 255, bias=[0, 0, 0])])
            ct_model.save(f)

            LOGGER.info(f'{prefix} export success, saved as {f} ({file_size(f):.1f} MB)')
            return ct_model, f
        except Exception as e:
            LOGGER.info(f'\n{prefix} export failure: {e}')
            return None, None

4. TensorRT

    def export_engine(model, im, file, train, half, simplify, workspace=4, verbose=False, prefix=colorstr('TensorRT:')):
        # YOLOv5 TensorRT export https://developer.nvidia.com/tensorrt
        try:
            check_requirements(('tensorrt',))
            import tensorrt as trt

            if trt.__version__[0] == '7':  # TensorRT 7 handling https://github.com/ultralytics/yolov5/issues/6012
                grid = model.model[-1].anchor_grid
                model.model[-1].anchor_grid = [a[..., :1, :1, :] for a in grid]
                export_onnx(model, im, file, 12, train, False, simplify)  # opset 12
                model.model[-1].anchor_grid = grid
            else:  # TensorRT >= 8
                check_version(trt.__version__, '8.0.0', hard=True)  # require tensorrt>=8.0.0
                export_onnx(model, im, file, 13, train, False, simplify)  # opset 13
            onnx = file.with_suffix('.onnx')

            LOGGER.info(f'\n{prefix} starting export with TensorRT {trt.__version__}...')
            assert im.device.type != 'cpu', 'export running on CPU but must be on GPU, i.e. `python export.py --device 0`'
            assert onnx.exists(), f'failed to export ONNX file: {onnx}'
            f = file.with_suffix('.engine')  # TensorRT engine file
            logger = trt.Logger(trt.Logger.INFO)
            if verbose:
                logger.min_severity = trt.Logger.Severity.VERBOSE

            builder = trt.Builder(logger)
            config = builder.create_builder_config()
            config.max_workspace_size = workspace * 1 << 30

            flag = (1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH))
            network = builder.create_network(flag)
            parser = trt.OnnxParser(network, logger)
            if not parser.parse_from_file(str(onnx)):
                raise RuntimeError(f'failed to load ONNX file: {onnx}')

            inputs = [network.get_input(i) for i in range(network.num_inputs)]
            outputs = [network.get_output(i) for i in range(network.num_outputs)]
            LOGGER.info(f'{prefix} Network Description:')
            for inp in inputs:
                LOGGER.info(f'{prefix}\tinput "{inp.name}" with shape {inp.shape} and dtype {inp.dtype}')
            for out in outputs:
                LOGGER.info(f'{prefix}\toutput "{out.name}" with shape {out.shape} and dtype {out.dtype}')

            half &= builder.platform_has_fast_fp16
            LOGGER.info(f'{prefix} building FP{16 if half else 32} engine in {f}')
            if half:
                config.set_flag(trt.BuilderFlag.FP16)
            with builder.build_engine(network, config) as engine, open(f, 'wb') as t:
                t.write(engine.serialize())
            LOGGER.info(f'{prefix} export success, saved as {f} ({file_size(f):.1f} MB)')
            return f
        except Exception as e:
            LOGGER.info(f'\n{prefix} export failure: {e}')
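
Three small decisions in the TensorRT listing are easy to misread: the workspace size expression, the opset chosen per TensorRT major version, and the FP16 fallback. The sketch below isolates them as plain Python so they can be checked without a GPU. The helper names and the version strings are assumptions for illustration.

```python
def workspace_bytes(workspace_gib):
    # `*` binds tighter than `<<`, so this is (workspace_gib * 1) << 30,
    # i.e. workspace_gib GiB expressed in bytes
    return workspace_gib * 1 << 30


def onnx_opset_for_tensorrt(version):
    # Mirrors the branch in the listing: TensorRT 7.x exports with opset 12,
    # every other accepted version with opset 13
    return 12 if version[0] == '7' else 13


def use_fp16(requested_half, platform_has_fast_fp16):
    # Same outcome as `half &= builder.platform_has_fast_fp16` for booleans:
    # FP16 is used only if it was requested and the hardware supports it
    return requested_half and platform_has_fast_fp16


print(workspace_bytes(4))                   # 4294967296
print(onnx_opset_for_tensorrt('7.2.3.4'))   # 12
print(onnx_opset_for_tensorrt('8.2.1.8'))   # 13
print(use_fp16(True, False))                # False
```

The default `workspace=4` therefore gives the builder up to 4 GiB of scratch memory.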

5. TensorFlow

    def export_pb(keras_model, im, file, prefix=colorstr('TensorFlow GraphDef:')):
        # YOLOv5 TensorFlow GraphDef *.pb export https://github.com/leimao/Frozen_Graph_TensorFlow
        try:
            import tensorflow as tf
            from tensorflow.python.framework.convert_to_constants import convert_variables_to_constants_v2

            LOGGER.info(f'\n{prefix} starting export with tensorflow {tf.__version__}...')
            f = file.with_suffix('.pb')

            m = tf.function(lambda x: keras_model(x))  # full model
            m = m.get_concrete_function(tf.TensorSpec(keras_model.inputs[0].shape, keras_model.inputs[0].dtype))
            frozen_func = convert_variables_to_constants_v2(m)
            frozen_func.graph.as_graph_def()
            tf.io.write_graph(graph_or_graph_def=frozen_func.graph, logdir=str(f.parent), name=f.name, as_text=False)

            LOGGER.info(f'{prefix} export success, saved as {f} ({file_size(f):.1f} MB)')
            return f
        except Exception as e:
            LOGGER.info(f'\n{prefix} export failure: {e}')

6. TensorFlow Lite

    def export_tflite(keras_model, im, file, int8, data, ncalib, prefix=colorstr('TensorFlow Lite:')):
        # YOLOv5 TensorFlow Lite export
        try:
            import tensorflow as tf

            LOGGER.info(f'\n{prefix} starting export with tensorflow {tf.__version__}...')
            batch_size, ch, *imgsz = list(im.shape)  # BCHW
            f = str(file).replace('.pt', '-fp16.tflite')

            converter = tf.lite.TFLiteConverter.from_keras_model(keras_model)
            converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS]
            converter.target_spec.supported_types = [tf.float16]
            converter.optimizations = [tf.lite.Optimize.DEFAULT]
            if int8:
                from models.tf import representative_dataset_gen
                dataset = LoadImages(check_dataset(data)['train'], img_size=imgsz, auto=False)  # representative data
                converter.representative_dataset = lambda: representative_dataset_gen(dataset, ncalib)
                converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
                converter.target_spec.supported_types = []
                converter.inference_input_type = tf.uint8  # or tf.int8
                converter.inference_output_type = tf.uint8  # or tf.int8
                converter.experimental_new_quantizer = False
                f = str(file).replace('.pt', '-int8.tflite')

            tflite_model = converter.convert()
            open(f, "wb").write(tflite_model)
            LOGGER.info(f'{prefix} export success, saved as {f} ({file_size(f):.1f} MB)')
            return f
        except Exception as e:
            LOGGER.info(f'\n{prefix} export failure: {e}')
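
In the INT8 branch the converter uses the representative dataset to calibrate value ranges, and the model then takes and returns `uint8` tensors. The sketch below shows the affine mapping that this kind of quantization relies on, `real = scale * (q - zero_point)`, using plain Python. The `scale` and `zero_point` values here are assumptions chosen for readability; in a real export they are picked by the converter during calibration.

```python
def quantize(x, scale, zero_point, qmin=0, qmax=255):
    """Map a real value to a uint8 code, clamping to the representable range."""
    q = round(x / scale) + zero_point
    return max(qmin, min(qmax, q))


def dequantize(q, scale, zero_point):
    """Map a uint8 code back to the real value it stands for."""
    return scale * (q - zero_point)


scale, zero_point = 1 / 255, 0  # assumed parameters for an input in [0, 1]
for x in (0.0, 0.25, 1.0, 2.0):
    q = quantize(x, scale, zero_point)
    print(x, q, round(dequantize(q, scale, zero_point), 4))
```

Values outside the calibrated range are clamped, which is why calibration images should resemble the data the model will see at inference time.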

7. Edge TPU

    def export_edgetpu(keras_model, im, file, prefix=colorstr('Edge TPU:')):
        # YOLOv5 Edge TPU export https://coral.ai/docs/edgetpu/models-intro/
        try:
            cmd = 'edgetpu_compiler --version'
            help_url = 'https://coral.ai/docs/edgetpu/compiler/'
            assert platform.system() == 'Linux', f'export only supported on Linux. See {help_url}'
            if subprocess.run(cmd + ' >/dev/null', shell=True).returncode != 0:
                LOGGER.info(f'\n{prefix} export requires Edge TPU compiler. Attempting install from {help_url}')
                sudo = subprocess.run('sudo --version >/dev/null', shell=True).returncode == 0  # sudo installed on system
                for c in ['curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -',
                          'echo "deb https://packages.cloud.google.com/apt coral-edgetpu-stable main" | sudo tee /etc/apt/sources.list.d/coral-edgetpu.list',
                          'sudo apt-get update',
                          'sudo apt-get install edgetpu-compiler']:
                    subprocess.run(c if sudo else c.replace('sudo ', ''), shell=True, check=True)
            ver = subprocess.run(cmd, shell=True, capture_output=True, check=True).stdout.decode().split()[-1]

            LOGGER.info(f'\n{prefix} starting export with Edge TPU compiler {ver}...')
            f = str(file).replace('.pt', '-int8_edgetpu.tflite')  # Edge TPU model
            f_tfl = str(file).replace('.pt', '-int8.tflite')  # TFLite model

            cmd = f"edgetpu_compiler -s {f_tfl}"
            subprocess.run(cmd, shell=True, check=True)

            LOGGER.info(f'{prefix} export success, saved as {f} ({file_size(f):.1f} MB)')
            return f
        except Exception as e:
            LOGGER.info(f'\n{prefix} export failure: {e}')
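
Two details of the Edge TPU listing are worth seeing in isolation: how the input and output file names are derived from the `.pt` path, and how the install commands are adapted when `sudo` is not available. The sketch below reproduces just that string handling without running any commands. The helper names and the sample commands are assumptions for this example.

```python
def edgetpu_paths(weights):
    """Return (INT8 TFLite input, Edge TPU output) paths derived from a .pt path."""
    f_tfl = weights.replace('.pt', '-int8.tflite')       # consumed by edgetpu_compiler
    f = weights.replace('.pt', '-int8_edgetpu.tflite')   # produced by edgetpu_compiler
    return f_tfl, f


def adapt_commands(commands, sudo_available):
    """Drop the 'sudo ' prefix from each command when sudo is not installed."""
    return [c if sudo_available else c.replace('sudo ', '') for c in commands]


print(edgetpu_paths('yolov5s.pt'))
print(adapt_commands(['sudo apt-get update', 'curl URL | sudo apt-key add -'], sudo_available=False))
```

Since the compiler is pointed at the `-int8.tflite` file, the INT8 TensorFlow Lite export has to exist before the Edge TPU step runs.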

8. TensorFlow.js

    def export_tfjs(keras_model, im, file, prefix=colorstr('TensorFlow.js:')):
        # YOLOv5 TensorFlow.js export
        try:
            check_requirements(('tensorflowjs',))
            import re

            import tensorflowjs as tfjs

            LOGGER.info(f'\n{prefix} starting export with tensorflowjs {tfjs.__version__}...')
            f = str(file).replace('.pt', '_web_model')  # js dir
            f_pb = file.with_suffix('.pb')  # *.pb path
            f_json = f + '/model.json'  # *.json path

            cmd = f'tensorflowjs_converter --input_format=tf_frozen_model ' \
                  f'--output_node_names="Identity,Identity_1,Identity_2,Identity_3" {f_pb} {f}'
            subprocess.run(cmd, shell=True)

            json = open(f_json).read()
            with open(f_json, 'w') as j:  # sort JSON Identity_* in ascending order
                subst = re.sub(
                    r'{"outputs": {"Identity.?.?": {"name": "Identity.?.?"}, '
                    r'"Identity.?.?": {"name": "Identity.?.?"}, '
                    r'"Identity.?.?": {"name": "Identity.?.?"}, '
                    r'"Identity.?.?": {"name": "Identity.?.?"}}}',
                    r'{"outputs": {"Identity": {"name": "Identity"}, '
                    r'"Identity_1": {"name": "Identity_1"}, '
                    r'"Identity_2": {"name": "Identity_2"}, '
                    r'"Identity_3": {"name": "Identity_3"}}}',
                    json)
                j.write(subst)

            LOGGER.info(f'{prefix} export success, saved as {f} ({file_size(f):.1f} MB)')
            return f
        except Exception as e:
            LOGGER.info(f'\n{prefix} export failure: {e}')
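
The regular expression at the end of the TensorFlow.js listing rewrites the `outputs` entry of `model.json` so that the four `Identity*` outputs appear in ascending order. The sketch below applies the same pattern and replacement to a small string. The `sample` text is a made-up stand-in for the relevant fragment of `model.json`, not a real converter output.

```python
import json
import re

PATTERN = (r'{"outputs": {"Identity.?.?": {"name": "Identity.?.?"}, '
           r'"Identity.?.?": {"name": "Identity.?.?"}, '
           r'"Identity.?.?": {"name": "Identity.?.?"}, '
           r'"Identity.?.?": {"name": "Identity.?.?"}}}')
REPLACEMENT = (r'{"outputs": {"Identity": {"name": "Identity"}, '
               r'"Identity_1": {"name": "Identity_1"}, '
               r'"Identity_2": {"name": "Identity_2"}, '
               r'"Identity_3": {"name": "Identity_3"}}}')

# Hypothetical fragment with the outputs listed out of order
sample = ('{"signature": {"outputs": {"Identity_2": {"name": "Identity_2"}, '
          '"Identity": {"name": "Identity"}, '
          '"Identity_3": {"name": "Identity_3"}, '
          '"Identity_1": {"name": "Identity_1"}}}}')

fixed = re.sub(PATTERN, REPLACEMENT, sample)
output_names = list(json.loads(fixed)["signature"]["outputs"])
print(output_names)
```

The pattern matches only the exact spacing and four-entry layout shown, so a file formatted differently would pass through unchanged.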

Detection Inference in the New Version

    python path/to/detect.py --weights yolov5s.pt                 # PyTorch
                                       yolov5s.torchscript        # TorchScript
                                       yolov5s.onnx               # ONNX Runtime or OpenCV DNN with --dnn
                                       yolov5s.xml                # OpenVINO
                                       yolov5s.engine             # TensorRT
                                       yolov5s.mlmodel            # CoreML (MacOS-only)
                                       yolov5s_saved_model        # TensorFlow SavedModel
                                       yolov5s.pb                 # TensorFlow GraphDef
                                       yolov5s.tflite             # TensorFlow Lite
                                       yolov5s_edgetpu.tflite     # TensorFlow Edge TPU
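
The list above pairs each weights file name with an inference backend. As a rough illustration of that pairing, the sketch below guesses the backend from the file name alone. It is a simplified, hypothetical helper written for this article and is not how `detect.py` is implemented.

```python
from pathlib import Path

# Suffix-to-backend table taken from the list of supported weights above
BACKENDS = {
    ".pt": "PyTorch",
    ".torchscript": "TorchScript",
    ".onnx": "ONNX Runtime or OpenCV DNN",
    ".xml": "OpenVINO",
    ".engine": "TensorRT",
    ".mlmodel": "CoreML",
    ".pb": "TensorFlow GraphDef",
    ".tflite": "TensorFlow Lite",
}


def guess_backend(weights):
    """Guess the inference backend from a weights path (illustrative only)."""
    name = Path(weights).name
    if name.endswith("_saved_model"):  # SavedModel is a directory without a suffix
        return "TensorFlow SavedModel"
    if name.endswith(".tflite") and "edgetpu" in name:
        return "TensorFlow Edge TPU"
    suffix = Path(name).suffix
    if suffix not in BACKENDS:
        raise ValueError(f"unrecognized weights format: {weights}")
    return BACKENDS[suffix]


for w in ("yolov5s.pt", "yolov5s.onnx", "yolov5s_saved_model", "yolov5s_edgetpu.tflite"):
    print(w, "->", guess_backend(w))
```

The two special cases come first because a SavedModel export is a directory and an Edge TPU model shares the `.tflite` suffix with a regular TensorFlow Lite model.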

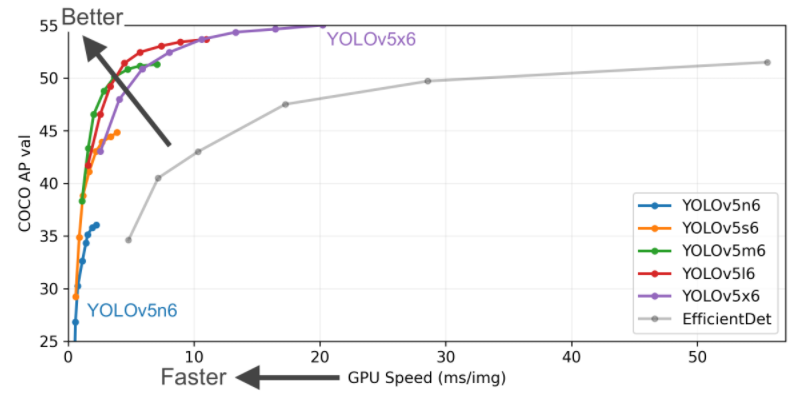

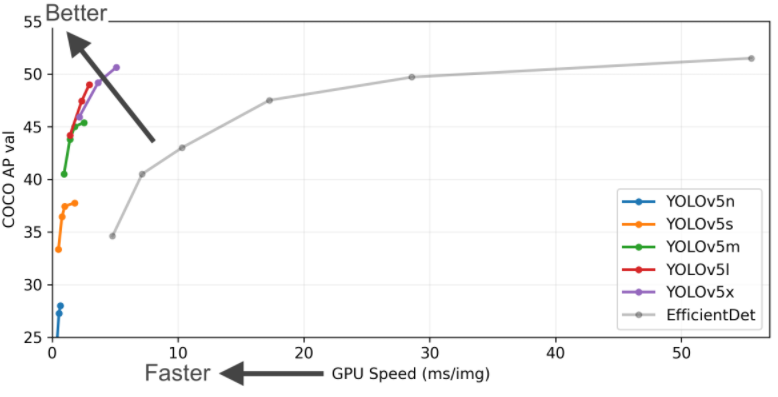

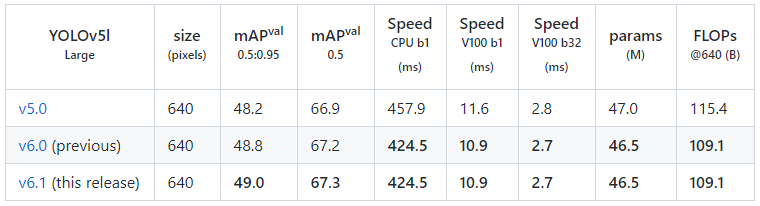

2 Latest Results

3 Accuracy Comparison with Version 6.0
